library(BMix)

#> Warning: replacing previous import 'cli::num_ansi_colors' by

#> 'crayon::num_ansi_colors' when loading 'BMix'

#> Warning: replacing previous import 'crayon::%+%' by 'ggplot2::%+%' when loading

#> 'BMix'

#> ✔ Loading BMix, 'Binomial and Beta-Binomial univariate mixtures'. Support : <https://caravagnalab.github.io/BMix/>Mixture models with BMix

Simulated data

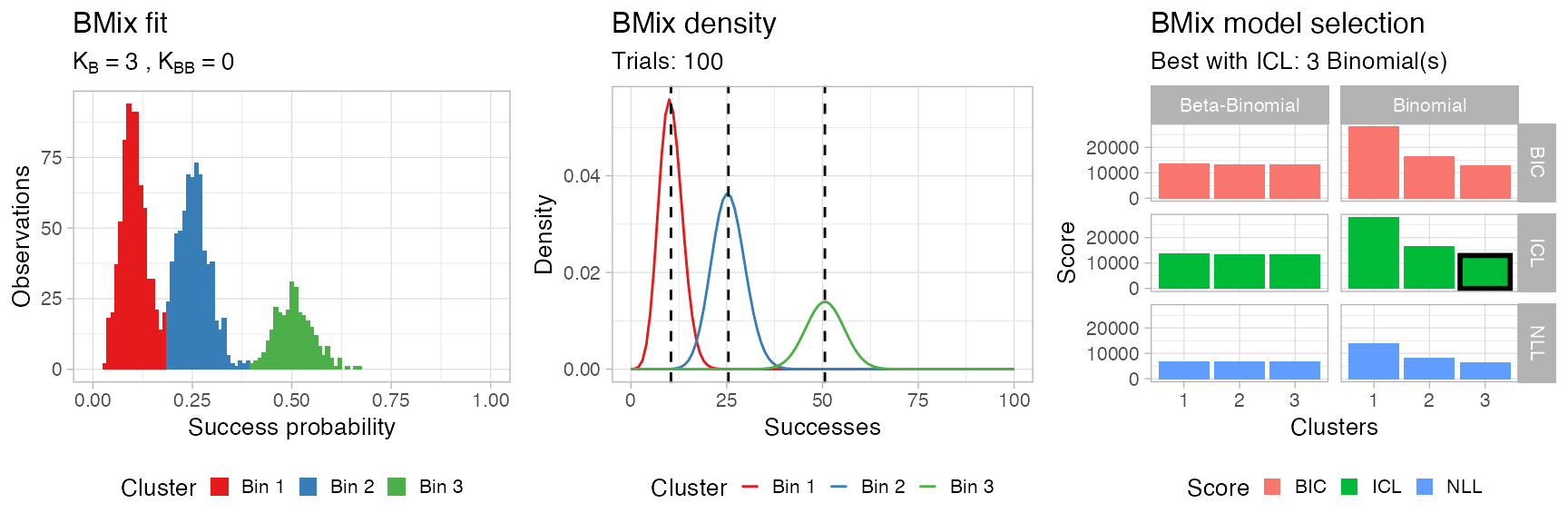

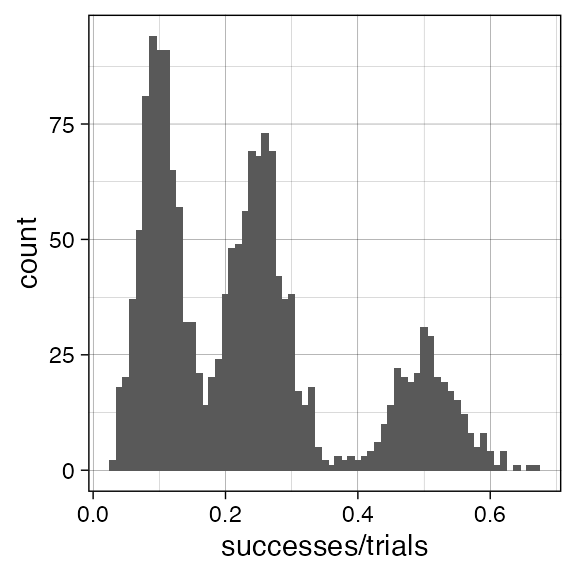

We generate some simple data obtained from \(k=3\) Binomial mixtures with Binomial probability \(p\in\{ 0.5, 0.25, 0.1\}\), drawing \(300\), \(700\) and \(700\) samples each. The number of trials is fixed to \(100\).

data = data.frame(

successes = c(

rbinom(300, 100, .5), # First component - 300 points, peak at 0.5

rbinom(700, 100, .25), # Second component - 700 points, peak at 0.25

rbinom(700, 100, .1)), # Third component - 700 points, peak at 0.1

trials = 100)

print(head(data))

#> successes trials

#> 1 54 100

#> 2 43 100

#> 3 59 100

#> 4 51 100

#> 5 47 100

#> 6 46 100

require(ggplot2)

#> Loading required package: ggplot2

ggplot(data, aes(successes/trials)) + geom_histogram(binwidth = 0.01) + theme_linedraw()

Binomial mixtures

Fitting the data

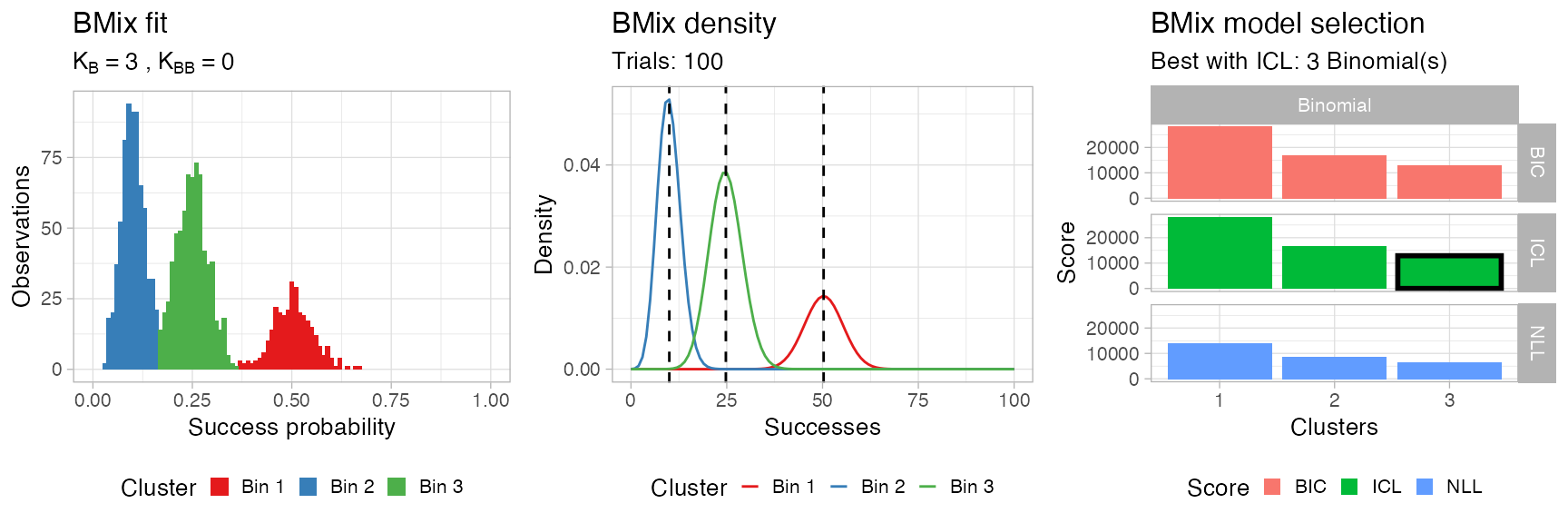

Fitting is done with function bmixfit, the default parameters test a number of configurations of Binomial components, but sets to \(0\) the number of Beta-Binomial components.

# Default parameters

x = bmixfit(data, K.Binomials = 1:3, K.BetaBinomials = 0)

#>

#> ── BMix fit ────────────────────────────────────────────────────────────────────

#> ℹ Binomials k_B = 1, 2, and 3, Beta-Binomials k_BB = 0; 6 fits to run.

#> ℹ Bmix best fit completed in 0.03 mins

#> ── [ BMix ] My BMix model n = 1700 with k = 3 component(s) (3 + 0) ─────────────

#> • Clusters: π = 42% [Bin 3], 40% [Bin 2], and 18% [Bin 1], with π > 0.

#> • Binomial Bin 1 with mean = 0.502830579467926.

#> • Binomial Bin 2 with mean = 0.100037472839639.

#> • Binomial Bin 3 with mean = 0.247624915318537.

#> ℹ Score (model selection): ICL = 12903.68.

# Maybe one could compare x to this

# y = bmixfit(data, K.Binomials = 0, K.BetaBinomials = 1:2)The fit is an object of class bmix which has S3 methods available.

print(x)

#> ── [ BMix ] My BMix model n = 1700 with k = 3 component(s) (3 + 0) ─────────────

#> • Clusters: π = 42% [Bin 3], 40% [Bin 2], and 18% [Bin 1], with π > 0.

#> • Binomial Bin 1 with mean = 0.502830579467926.

#> • Binomial Bin 2 with mean = 0.100037472839639.

#> • Binomial Bin 3 with mean = 0.247624915318537.

#> ℹ Score (model selection): ICL = 12903.68.Note: The input data is not stored inside the fit, you have keep it and pass it to plotting functions. Keep it consistent, the package is not doing any checks about the input.

You have getters to access the clusters (as tibble) and the fit parameters.

# Augment data with cluster labels and latent variables

Clusters(x, data)

#> # A tibble: 1,700 x 6

#> successes trials cluster `Bin 1` `Bin 2` `Bin 3`

#> <int> <dbl> <chr> <dbl> <dbl> <dbl>

#> 1 54 100 Bin 1 1.00 1.32e-25 2.55e- 9

#> 2 43 100 Bin 1 0.999 1.54e-15 5.31e- 4

#> 3 59 100 Bin 1 1.00 3.49e-30 9.73e-12

#> 4 51 100 Bin 1 1.00 7.33e-23 7.19e- 8

#> 5 47 100 Bin 1 1.00 3.36e-19 6.18e- 6

#> 6 46 100 Bin 1 1.00 2.77e-18 1.88e- 5

#> 7 49 100 Bin 1 1.00 4.97e-21 6.66e- 7

#> 8 52 100 Bin 1 1.00 8.91e-24 2.36e- 8

#> 9 46 100 Bin 1 1.00 2.77e-18 1.88e- 5

#> 10 53 100 Bin 1 1.00 1.08e-24 7.75e- 9

#> # … with 1,690 more rows

# Obtain for every fit component the mean and its overdispersion.

# Binomial components have 0 overdispersion by definition.

Parameters(x)

#> # A tibble: 3 x 3

#> cluster mean overdispersion

#> <chr> <dbl> <dbl>

#> 1 Bin 1 0.503 0

#> 2 Bin 2 0.100 0

#> 3 Bin 3 0.248 0Plotting the fits

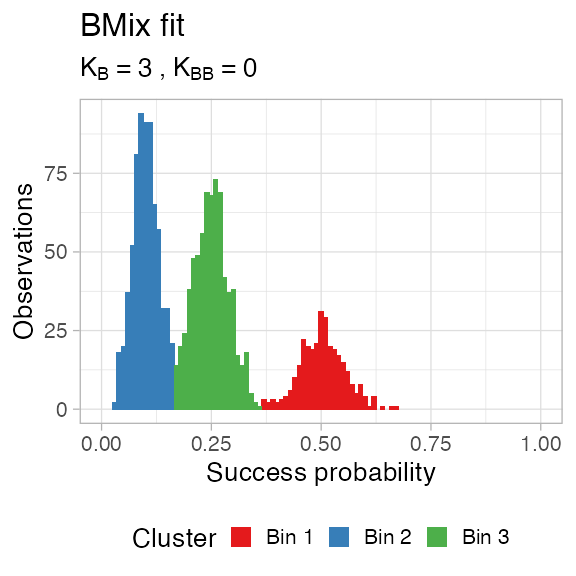

You can plot the clustering assignments (hard clustering).

plot_clusters(x, data)

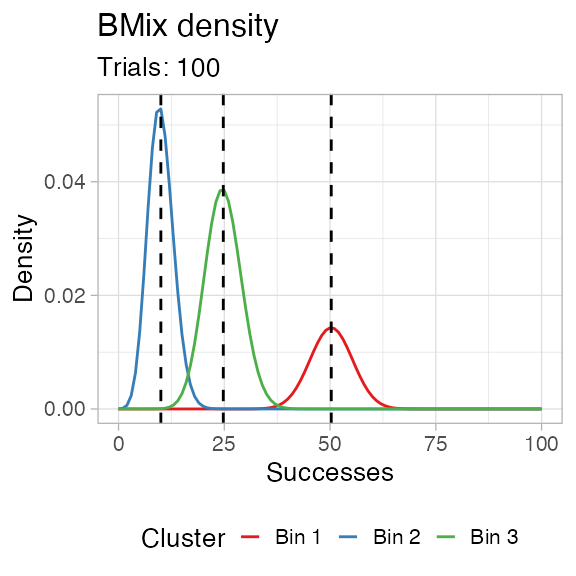

You can plot the density, in frequency space. For this a number of trials needs to be fixed; by default BMix takes the median number of trials in the input data, but you can decide to use any other interger number.

plot_density(x, data)

You can visualise the result of the model selection grid as a heatmap; the best model is the one that minimizes the chosen score, which is by default the Integrated Classification Likelihood, an extension of the Bayesian Information Criterion that accounts for the entropy of the latent variables.

Then plot assembles all these plots using the cowplot package.

BMix::plot.bmix(x, data)

Beta-Binomial mixtures

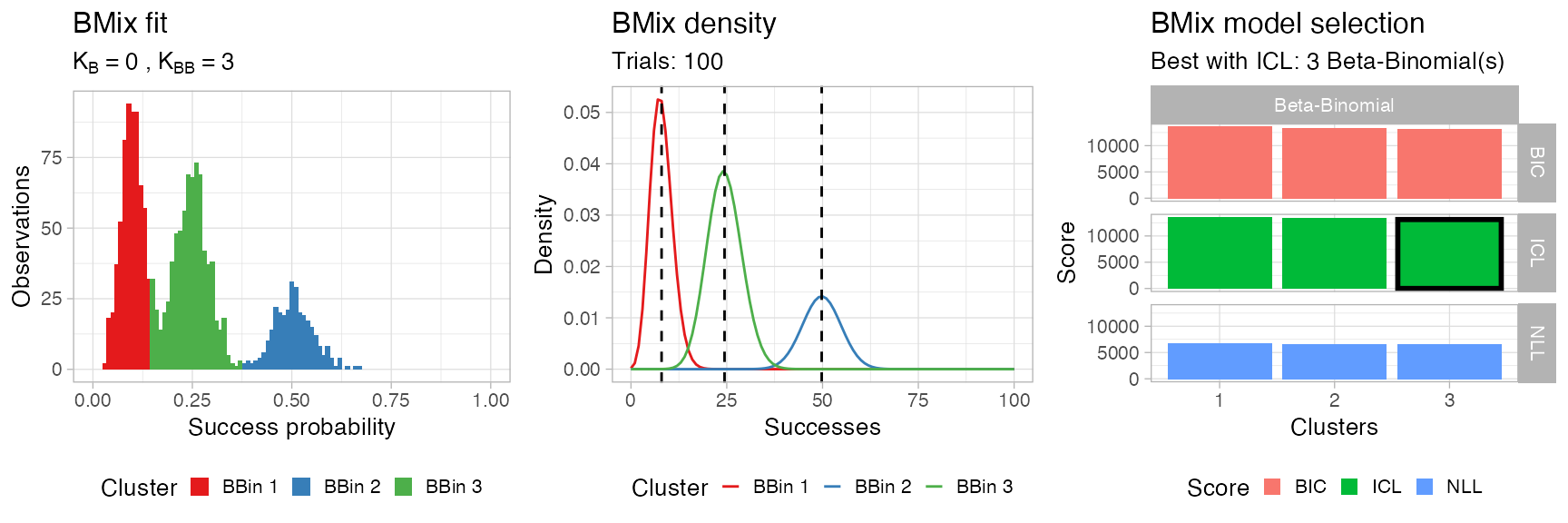

If you want to test the same model with only Beta-Binomial components you can run function bmixfit as follows.

# Custom parameters

x = bmixfit(data,

K.Binomials = 0,

K.BetaBinomials = 1:3)

#>

#> ── BMix fit ────────────────────────────────────────────────────────────────────

#> ℹ Binomials k_B = 0, Beta-Binomials k_BB = 1, 2, and 3; 6 fits to run.

#> ℹ Bmix best fit completed in 0.18 mins

#> ── [ BMix ] My BMix model n = 1700 with k = 3 component(s) (0 + 3) ─────────────

#> • Clusters: π = 44% [BBin 3], 38% [BBin 1], and 18% [BBin 2], with π > 0.

#> ℹ Score (model selection): ICL = 13126.36.

# Show outputs

print(x)

#> ── [ BMix ] My BMix model n = 1700 with k = 3 component(s) (0 + 3) ─────────────

#> • Clusters: π = 44% [BBin 3], 38% [BBin 1], and 18% [BBin 2], with π > 0.

#> ℹ Score (model selection): ICL = 13126.36.

BMix::plot.bmix(x, data)

Comparing mixtures

You can compare models with both type of components.

# Custom parameters

x = bmixfit(data,

K.Binomials = 0:3,

K.BetaBinomials = 0:3)

#>

#> ── BMix fit ────────────────────────────────────────────────────────────────────

#> ℹ Binomials k_B = 0, 1, 2, and 3, Beta-Binomials k_BB = 0, 1, 2, and 3; 12 fits to run.

#> ℹ Bmix best fit completed in 0.2 mins

#> ── [ BMix ] My BMix model n = 1700 with k = 3 component(s) (3 + 0) ─────────────

#> • Clusters: π = 43% [Bin 1], 40% [Bin 2], and 18% [Bin 3], with π > 0.

#> • Binomial Bin 1 with mean = 0.104387833007354.

#> • Binomial Bin 2 with mean = 0.254352355491927.

#> • Binomial Bin 3 with mean = 0.506102399197554.

#> ℹ Score (model selection): ICL = 12967.51.

# Show outputs

print(x)

#> ── [ BMix ] My BMix model n = 1700 with k = 3 component(s) (3 + 0) ─────────────

#> • Clusters: π = 43% [Bin 1], 40% [Bin 2], and 18% [Bin 3], with π > 0.

#> • Binomial Bin 1 with mean = 0.104387833007354.

#> • Binomial Bin 2 with mean = 0.254352355491927.

#> • Binomial Bin 3 with mean = 0.506102399197554.

#> ℹ Score (model selection): ICL = 12967.51.

BMix::plot.bmix(x, data)